A pilot virtual case-management intervention for caregivers of persons with Alzheimer’s disease

Introduction

In 2015, informal, unpaid caregivers of persons with Alzheimer’s disease (AD) provided 18.1 billion hours of care valued at $221.3 billion (1). If AD caregivers are not properly supported, they may lose their ability to provide care, shifting this expense to the formal healthcare sector. Given that AD caregivers suffer negative physical and mental health outcomes tied to their caregiving role (2-4), it is imperative that supports addressing these issues be in place so that they can continue to provide care in the informal sector.

With this in mind, we have carried out a series of studies under the “Health e-Brain Study” initiative, aimed at characterizing the cognitive and emotional states of AD caregivers, with the ultimate goal of implementing an intervention to address any negative outcomes. The first phase of the project established a virtual cohort of caregivers and showed (I) that their performance on a number of cognitive evaluations is significantly worse than that of demographically matched non-caregiver controls and (II) that this performance is related to a number of social and quantitative variables such as hours of care provided, perceived social support, and stress (5). A second study recruited unpaid AD caregivers and showed (I) that the majority had high levels of caregiver burden and (II) that degree of burden is positively associated with measures of depression, anxiety, and impaired sleep quality (6). This latter study suggested that caregivers with Internet and smartphone access might be well-suited to receive caregiver support services, delivered via mobile devices, that target these issues.

This report summarizes our pilot implementation of a telehealth intervention, which combined technology-enabled case management services with repeated feedback regarding cognitive functioning to provide support to caregivers with evidence of high caregiver burden and psychosocial distress. Informal (family) caregivers with high caregiver burden and moderate behavioral health symptoms were recruited and referred to Mindoula, a virtual case-management platform that provides emotional and community support to individuals experiencing a range of behavioral health symptoms.

Methods

The current pilot enrolled a screening sample of 265 informal, unpaid AD caregivers between the ages of 45 and 75 years who reported using a smartphone, having Internet access, and being able to speak and read English. AD caregivers were recruited through the outreach of partner organizations [Bright Focus Foundation, Geoffrey Beene Foundation Alzheimer’s Initiative, Us Against Alzheimer’s, and the Patient-Centered Outcomes Research Institute Alzheimer’s and Dementia Patient and Caregiver Powered Research Network (PCORI AD-PCPRN; a coalition of 43 partner institutions)] as well as the study’s website (http://www.health-ebrainstudy.org). Once potential participants either accessed the website directly or were directed to it, they were provided with a consent form, which they signed electronically before moving on to data collection. Enrolled individuals completed a questionnaire soliciting demographic information as well as responses to four behavioral questionnaires: the M3, a risk-screening instrument for depression, anxiety, bipolar disorder, and posttraumatic stress disorder (PTSD) (7); the Zarit Burden Interview, an assessment of caregiver burden (8); the Patient Health Questionnaire 9 (PHQ-9), a screening instrument for depression; and the Patient-Reported Outcomes Measurement Information System (PROMIS) form, an assessment of sleep quality (9). The results of these surveys were previously published for an initial screening sample of 165 participants (6).

Based on responses to these items, participants were invited to join the main study if they met more specific inclusion/exclusion criteria related to the behavioral health assessments. In particular, participants were eligible if their initial Zarit Burden Interview score was 21 or higher and their overall (cumulative) M3 score was between 20 and 53 (indicating moderate risk for behavioral health issues). Finally, potential participants were excluded if they reported color blindness, had a cognitive diagnosis (e.g., traumatic brain injury or dementia), or scored in the “high risk” range for any M3 subscale.
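The screening rule in the preceding paragraph can be sketched as a small function. This is an illustration rather than the study's actual screening code: the class and field names are hypothetical, the M3 range is assumed to be inclusive at both ends, and the "high risk" subscale determination is taken as a precomputed flag because the text does not give the subscale cut-offs.

```python
from dataclasses import dataclass


@dataclass
class ScreeningResponse:
    """Hypothetical container for one caregiver's screening data."""
    zarit_total: int             # Zarit Burden Interview total score
    m3_total: int                # overall (cumulative) M3 score
    m3_subscale_high_risk: bool  # True if any M3 subscale is in the "high risk" range
    color_blind: bool
    cognitive_diagnosis: bool    # e.g., traumatic brain injury or dementia


def is_eligible(r: ScreeningResponse) -> bool:
    """Apply the inclusion and exclusion criteria described in the text."""
    # Inclusion: Zarit >= 21 and overall M3 between 20 and 53
    # (bounds assumed inclusive; the text does not say).
    meets_inclusion = r.zarit_total >= 21 and 20 <= r.m3_total <= 53
    # Exclusion: any one of these removes the caregiver from the pilot.
    excluded = r.color_blind or r.cognitive_diagnosis or r.m3_subscale_high_risk
    return meets_inclusion and not excluded
```

For example, a caregiver with a Zarit score of 44 and an overall M3 score of 27, with no exclusion flags set, would be invited; the same caregiver with any M3 subscale in the "high risk" range would not.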

Over a 3-month period, participants utilized the Mindoula case management service via a smartphone application while completing regularly scheduled behavioral assessments. The Mindoula platform is designed to provide individuals with moderate behavioral difficulties real-time support from an assigned case manager (10). Participants are able to interact with their case manager via secure text messaging and/or phone conversations. Additionally, the platform includes a “check-in” feature, a brief, three-item survey that lets participants self-report their perceptions of overall affect, productivity, and sleep quality to facilitate information sharing with case managers. Based on participant-specific information obtained from Mindoula’s intake procedure, case managers identified care modalities that could be utilized during the study period (e.g., concrete service delivery, emotional support, and education).

To create a baseline behavioral health profile, each participant was re-administered the M3 and the PHQ-9 as a part of Mindoula’s intake procedure. Baseline data on the Zarit Burden Interview and the PROMIS sleep survey were derived from initial screening responses as described above. Participants were instructed to re-take the M3, Zarit Burden Interview, and the PROMIS sleep survey during the study period at weeks four and twelve. At the end of the study period, participants were re-assessed on all of these instruments for final outcomes.

Finally, participants also completed the digital automated neurocognitive assessment (DANA), a smartphone- or tablet-based FDA-cleared neurocognitive assessment tool, as a means of tracking any changes in cognitive performance over the study period. Participants were instructed to take DANA Brain Vital, a five-minute suite of three neurocognitive tests, a minimum of once per week for a total of at least 12 administrations. Table 1 describes the subtests in DANA Brain Vital. DANA results are measured in throughput, a unit that combines speed and accuracy.

Table 1

| DANA subtest | Description |
|---|---|
| SRT | The subject taps an orange target symbol as quickly as possible each time it appears. The location and shape of the stimulus do not vary from trial to trial. |
| PRT | The screen displays one of four numbers (1, 2, 3, or 4) for 2 seconds. The subject taps the left button (“1 or 2”) or right button (“3 or 4”) as quickly as possible to indicate which category corresponds to the number displayed. |
| GNG | A building is presented on the screen with several windows. Either a “friend” (green alien) or “foe” (gray alien) appears in a window. The subject must tap the “blast” button as quickly as possible only when a “foe” appears. |

DANA, digital automated neurocognitive assessment; SRT, simple reaction time; PRT, procedural reaction time; GNG, go/no-go.
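The text describes throughput only as a unit that combines speed and accuracy and does not give a formula. One common convention for reaction-time batteries, assumed here purely for illustration, is the number of correct responses per minute of response time. The function name and inputs below are hypothetical and are not taken from the DANA software.

```python
def throughput(reaction_times_ms, correct):
    """Correct responses per minute of response time for one subtest administration.

    Assumed definition (not stated in the source): count of correct trials
    divided by the summed reaction time expressed in minutes.
    """
    if len(reaction_times_ms) != len(correct) or not reaction_times_ms:
        raise ValueError("need one correctness flag per trial and at least one trial")
    total_minutes = sum(reaction_times_ms) / 60_000  # milliseconds to minutes
    return sum(correct) / total_minutes
```

Under this definition, a subject who responds faster at the same accuracy, or more accurately at the same speed, earns a higher throughput, which is why a single number can stand in for both.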

The study protocol was approved by the AnthroTronix Institutional Review Board (#091614A).

Results

The screening sample of 265 participants evidenced behavioral health profiles similar to those of the smaller sample previously published, with a mean Zarit Burden Interview score of 44.3, a mean PROMIS score of 26.9, a mean M3 depression score of 7.1, and a mean M3 anxiety score of 7.2. Twenty participants who met the inclusion/exclusion criteria accepted the invitation to join the pilot and underwent Mindoula’s intake procedure. Of these, 13 participants completed post-intervention measures, and their data form the basis of the analyses that follow.

The final sample included 12 females and one male with a mean age of 53.8 [standard deviation (SD): 6.7]. The majority of the sample (~80%) had been providing care for their AD relative for 1 to 3 years, with the remainder having provided care for 4 to 6 years (~15%) or less than 1 year (~8%). Twenty-three percent reported providing care for less than 10 hours a week, with roughly 38% providing more than 100 hours of care per week. The majority of participants (62%) reported providing care for a parent, with 23% caring for an “other relative” and 15% caring for a spouse or partner.

Pre- and post-measures

Pre- and post-intervention scores for the PHQ-9, M3, Zarit Burden Interview, and PROMIS sleep disturbance form are summarized in Table 2. For the two measures that can be interpreted in terms of clinical categories, the PHQ-9 and the Zarit Burden Interview, pre-intervention mean scores indicated mild depression (8.8) and “moderate-to-severe” burden (46.7), respectively. Post-intervention scores on both instruments decreased: the final PHQ-9 mean score was 4.2, indicating minimal or no depression, while the final mean Zarit Burden Interview score was 40.8, still indicative of “moderate-to-severe” burden but close to the “mild-to-moderate” cut-point of 40. Post-intervention numerical decreases were also observed on the measures without categorical structures, the mean overall M3 score and the mean PROMIS score.

Table 2

| Assessment | Pre-score, mean (SD) | Post-score, mean (SD) | V | P |
|---|---|---|---|---|
| PHQ-9 | 8.8 (3.6) | 4.2 (3.4) | 84 | <0.01* |
| M3 | 26.8 (9.7) | 18.9 (11.6) | 68.5 | 0.12 |
| Zarit | 46.7 (11.8) | 40.8 (12.6) | 76.5 | <0.01* |
| PROMIS | 21.3 (6.4) | 18.8 (6.9) | 59.5 | 0.34 |

*, significant at the 95% confidence level. V, Wilcoxon signed-rank test statistic; SD, standard deviation; PHQ-9, Patient Health Questionnaire 9; PROMIS, Patient-Reported Outcomes Measurement Information System.

Pre/post differences for each instrument were formally tested with Wilcoxon signed-rank tests, a nonparametric analog of the paired t-test. Score changes for the PHQ-9 and Zarit Burden Interview were significant at the 95% confidence level (PHQ-9: V=84, P<0.01; Zarit: V=76.5, P<0.01); however, tests of differences for overall M3 and PROMIS scores did not reach significance (M3: V=68.5, P=0.12; PROMIS: V=59.5, P=0.34). As a follow-up analysis on M3 scores, we also tested for decreases in the depression and anxiety sub-scores, but neither reached significance despite downward numerical trends.
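For readers unfamiliar with the V statistic, the sketch below shows how it is conventionally computed: zero differences are dropped, the remaining absolute differences are ranked (tied values share the average of their ranks), and V is the sum of the ranks belonging to positive differences. The function name is hypothetical, the data in the usage note are invented, and the code assumes integer-valued scores; it is not the study's analysis code, and the source does not name the software used.

```python
def signed_rank_v(pre, post):
    """Return V: the sum of ranks of the positive (pre - post) differences."""
    # Pairs with no change carry no sign information and are dropped.
    diffs = [a - b for a, b in zip(pre, post) if a != b]
    ordered = sorted(diffs, key=abs)
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        # Extend j across the run of tied absolute differences.
        while j + 1 < len(ordered) and abs(ordered[j + 1]) == abs(ordered[i]):
            j += 1
        mid_rank = (i + 1 + j + 1) / 2  # average of ranks i+1 through j+1
        for k in range(i, j + 1):
            ranks[k] = mid_rank
        i = j + 1
    # With pre - post differences, a larger V means more scores went down.
    return sum(rank for d, rank in zip(ordered, ranks) if d > 0)
```

With invented scores `pre = [10, 8, 12, 7, 9]` and `post = [6, 8, 9, 8, 6]`, the non-zero differences are 4, 3, -1, and 3, their ranks are 4, 2.5, 1, and 2.5, and V is 9.0. Average ranks for ties are what produce half-integer values of V. With 13 pairs and no zero differences, V ranges from 0 to 91.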

While not a core component of this study, of interest is whether cognitive performance showed any appreciable change from study start-date to end-date. To address this question, a multilevel model with time as a predictor and intercepts estimated for each subject was constructed for each DANA subtest. Only subtests where at least 66% of trials were correctly completed were considered as valid; invalid assessments were discarded from the dataset. No slope coefficient for any test reached significance at the 95% confidence level [simple reaction time (SRT): b =−0.03, P=0.25; procedural reaction time (PRT): b =0.03, P=0.10; go/no-go (GNG): b =0.02, P=0.40], so we cannot conclude that throughput meaningfully changed as a function of time-in-study.
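The model described above can be written out explicitly. As a sketch, assuming a random-intercept linear specification (the source says only that time was a predictor and that intercepts were estimated for each subject; the distributional assumptions and every symbol other than b are ours):

$$
y_{ij} = \beta_0 + u_j + b\,t_{ij} + \varepsilon_{ij}, \qquad u_j \sim \mathcal{N}(0, \sigma_u^2), \qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
$$

Here $y_{ij}$ is the throughput from subject $j$'s $i$-th valid administration of a given subtest, $t_{ij}$ is the corresponding time in study, $\beta_0 + u_j$ is that subject's own intercept, and $b$ is the slope shared across subjects, which is the coefficient reported above. A slope indistinguishable from zero means the data give no evidence of a systematic rise or fall in throughput over the study period.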

Engagement measures

We also collected data related to general engagement with the intervention from both Mindoula-specific modalities (number of messages sent and received, number of “check-ins,” and number of calls) as well as opportunities to self-administer DANA (Table 3). Participants sent an average of 51.3 messages, made an average of three phone calls, and completed an average of 22.7 “check-ins”. Across the sample, DANA was taken an average of 11.3 times over the course of the study period.

Table 3

| Engagement metrics | Mean (SD) |
|---|---|
| Messages received | 73.0 (38.8) |
| Messages sent | 51.3 (31.7) |
| Check-ins | 22.7 (24.3) |
| Calls | 3 (3.6) |
| Times DANA taken | 11.3 (4.9) |

DANA, digital automated neurocognitive assessment; SD, standard deviation.

Additionally, Mindoula case managers provided case summaries for each participant based on their notes and observations. A qualitative review of these summaries shows that the majority of participants (11/13) used the service primarily for emotional support. Over half of the participants (8/13) asked for help finding resources such as information on support groups, respite care, in-home supports, and day programs, and seven desired assistance with self-care. All participants reported good satisfaction with the intervention, and at the end of the study period, seven participants elected to continue with a complimentary extension of Mindoula services. Finally, four participants are recorded as having mentioned some benefit from the DANA administrations. For example, one participant noted their perception of a relationship between sleep quality and cognitive performance.

Discussion

This pilot study examined the feasibility of a novel technology-enabled case management intervention. Lacking a multi-arm randomized design, we cannot attribute any outcomes solely to the efficacy of the intervention, but a number of promising observations related to behavioral health outcomes and qualitative metrics suggest that a full-scale, randomized controlled study is warranted.

The pre/post survey data show that, in the aggregate, participants experienced numerical decreases on measures related to caregiver burden, depression, anxiety, and sleep disturbance, suggesting symptom improvement. Exploratory analyses of pre/post PHQ-9 and Zarit Burden Interview scores showed that these differences are statistically reliable, but we note that our sample size was small and thus substantive claims regarding symptom improvement should be reserved for results obtained from a better-powered study.

Regarding overall engagement with the intervention technologies, we found that active utilization by participants was generally good. Quantitative engagement metrics indicate that participant-case manager interaction was both frequent and inclusive of all interaction modalities. We observed that some participants tended to favor one modality over others (e.g., a preference for text messaging over phone calls), indicating that the platform is flexible enough to adapt to individual communication preferences. In addition, participants’ substantive utilization of the case management service was similarly diverse, with support topics covering emotional well-being, practical help, education, etc.

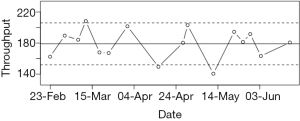

Some participants engaged actively with the DANA cognitive assessments as yet another avenue of interaction with their case managers, indicating a potential expansion of DANA’s role in future studies. In particular, we propose a “red-flag” evaluation system whereby individual neurocognitive assessments could be categorized according to whether they are inside or outside of the normal range for a given subject. Figure 1 illustrates one possible implementation, showing a participant’s longitudinal results from the Simple Reaction Time subtest along with 80% prediction bands, which delimit the expected range of “normal” performance on the basis of previous performance. If an administration falls outside of this range (as some do in Figure 1), this would trigger a “red flag” to the case manager, allowing them to evaluate whether or not such aberrations in cognitive performance are of concern and warrant action. The overarching goal of such a system would be to allow case managers more insight into participants’ overall health profiles.
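A minimal sketch of one way such a “red-flag” check might work is given below. It is not the method used to draw Figure 1, which the text does not specify. It assumes that a subject’s prior throughput scores are roughly normally distributed around a stable mean, uses a normal quantile rather than a t quantile for simplicity, and requires a hypothetical minimum of four prior administrations before flagging; all function and parameter names are illustrative.

```python
from statistics import NormalDist, mean, stdev


def prediction_band(history, level=0.80):
    """Return (low, high) for the next score, given at least two prior scores."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two prior administrations")
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.28 for an 80% band
    # The sqrt(1 + 1/n) factor widens the band to reflect uncertainty in the mean.
    half_width = z * stdev(history) * (1 + 1 / n) ** 0.5
    centre = mean(history)
    return centre - half_width, centre + half_width


def red_flags(scores, min_history=4, level=0.80):
    """Indices of administrations falling outside the band built from earlier ones."""
    flagged = []
    for i in range(min_history, len(scores)):
        low, high = prediction_band(scores[:i], level)
        if not low <= scores[i] <= high:
            flagged.append(i)
    return flagged
```

With the invented series `[200, 205, 195, 202, 198, 150, 201]`, only the sixth administration (index 5) is flagged. One design choice worth noting is that flagged scores remain in the history here, so a single aberrant result widens later bands; a production system might instead exclude flagged values or use a robust spread estimate.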

In any mHealth intervention, a seamless user experience is critical to success, and certain features of this study may have contributed to the poor completion rate (65%): of the 20 initial subjects, final data exist for only 13. The study utilized multiple collection media (e.g., two smartphone applications and multiple websites for survey data) and relatively time-consuming tasks, which may have increased burden on an already characteristically burdened sample. Thus, future studies should attempt to streamline data collection to make participation less burdensome.

Finally, we note that our results can be extended to policy considerations, particularly in the context of the growing awareness of the benefits of technology-enabled supports and services. A recent National Academy of Medicine report emphasized not only the well-recognized needs of caregivers, but also the evidence that technology-based interventions, such as the one proposed here, can uniquely address such needs (11). In the context of the present study, we have demonstrated that such technology-enabled platforms are capable of providing support and concrete services that have traditionally been accessible to patients only via limited face-to-face resources. Our pilot’s inclusion of a focus on computerized cognitive testing is a promising addition to a technological platform as well, since the neurocognitive symptoms characteristic of the caregiving population are traditionally evaluated with time-consuming, pencil-and-paper-based assessments. DANA can be remotely administered and automatically scored, facilitating cognitive assessment as a component of a technology-based system. Efforts being made by the Centers for Medicare and Medicaid Services (CMS) and commercial payers (e.g., behavioral health integration and collaborative care programs) to provide support for patients within primary care settings (12) present an interesting opportunity to provide technology-enabled programs tailored to caregivers with behavioral health conditions. We would suggest that CMS and the Center for Medicare and Medicaid Innovation (CMMI) consider mechanisms to more widely disseminate such programs and ensure appropriate reimbursement for these services.

Acknowledgments

We would like to thank the Mindoula case management team (Amy Wilson, Daun Duncan, and Paige Lewis) and the AnthroTronix study coordination team (Charlotte Safos, Rita Shewbridge, Marissa Lee, and James Drane).

Funding: The study was supported by the Bright Focus Foundation and the Geoffrey Beene Foundation Alzheimer’s Initiative.

Footnote

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/jhmhp.2018.04.02). AnthroTronix, Inc. is the creator of the DANA software app, and Mindoula Health, Inc. is the creator of the Mindoula virtual case management platform. The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study protocol was approved by the AnthroTronix Institutional Review Board (#091614A) and written informed consent was obtained from all patients.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Alzheimer’s Association. Alzheimer’s disease facts and figures. Alzheimers Dement 2016;12:459-509.

2. Schulz R, Martire LM. Family caregiving of persons with dementia: Prevalence, health effects, and support strategies. Am J Geriatr Psychiatry 2004;12:240-9.

3. Vitaliano PP, Zhang J, Scanlan JM. Is caregiving hazardous to one’s physical health? Psychol Bull 2003;129:946-72.

4. Vitaliano PP, Ustundag O, Borson S. Objective and subjective cognitive problems among caregivers and matched non-caregivers. Gerontologist 2017;57:637-47.

5. Lathan C, Wallace AS, Shewbridge R, et al. Cognitive Health Assessment and Establishment of a Virtual Cohort of Dementia Caregivers. Dement Geriatr Cogn Dis Extra 2016;6:98-107.

6. Coffman I, Resnick HE, Lathan CE. Behavioral health characteristics of a technology-enabled sample of Alzheimer’s caregivers with high caregiver burden. mHealth 2017;3:36.

7. Gaynes BN, DeVeaugh-Geiss J, Weir S, et al. Feasibility and diagnostic validity of the M-3 checklist: a brief, self-rated screen for depressive, bipolar, anxiety, and post-traumatic stress disorders in primary care. Ann Fam Med 2010;8:160-9.

8. Hébert R, Bravo G, Préville M. Reliability, validity and reference values of the Zarit Burden Interview for assessing informal caregivers of community-dwelling older persons with dementia. Can J Aging 2000;19:494-507.

9. Yu L, Buysse DJ, Germain A, et al. Development of short forms from the PROMIS™ sleep disturbance and sleep-related impairment item banks. Behav Sleep Med 2011;10:6-24.

10. Talisman N, Kaltman S, Davis K, et al. Case management: a new approach. Psychiatr Ann 2015;45:134-8.

11. National Academies of Sciences, Engineering, and Medicine. Families caring for an aging America. Washington, DC: National Academies Press, 2016.

12. Press MJ, Howe R, Schoenbaum M, et al. Medicare payment for behavioral health integration. N Engl J Med 2017;376:405-7.

Cite this article as: Lathan CE, Coffman I, Sidel S, Alter C. A pilot virtual case-management intervention for caregivers of persons with Alzheimer’s disease. J Hosp Manag Health Policy 2018;2:16.